As you navigate the rapidly evolving AI landscape, understanding how to effectively communicate with large language models (LLMs) is becoming an important skill. Whether you’re a curious beginner or an aspiring AI developer, mastering the art of prompting can significantly enhance your ability to harness the power of AI. In fact, crafting the right prompt can make all the difference in getting accurate, relevant, and valuable responses from AI systems. This comprehensive guide will take you from zero to hero in prompt engineering, equipping you with the knowledge and techniques to get the most out of your AI interactions. For inspiration, check out The Art of AI Prompt Crafting: A Comprehensive Guide for Enthusiasts, and get ready to unlock the full potential of AI.

Understanding Prompts

For effective communication with large language models, it’s crucial to craft well-structured and clear prompts that guide the AI to produce the desired output. In this chapter, we’ll delve into the characteristics of good prompts, explore examples of good and bad prompts, and discuss the importance of understanding prompts in AI interactions.

Characteristics of a Good Prompt

Now, let’s examine the key characteristics of a good prompt. A good prompt should possess the following qualities:

- Clear and unambiguous: it leaves no room for misinterpretation.

- Detailed: it provides enough detail to guide the AI’s response, including relevant background information when necessary.

- Well structured: it is easy for the AI to parse, which makes a relevant and accurate output more likely.

- Goal-oriented: it has a clear goal or desired outcome, so the AI understands what you’re trying to achieve.

By incorporating these characteristics, you can significantly improve the quality of your prompts and get more accurate and relevant responses from the AI.

Examples of Good and Bad Prompts

For instance, consider the following two prompts:

Bad prompt: “Tell me about apples.”

Good prompt: “Provide a comprehensive overview of apples, including their nutritional benefits, popular varieties, and their role in culinary traditions around the world. Structure your response with clear headings and bullet points where appropriate.”

The second prompt is much more likely to yield a detailed, well-structured response that covers multiple aspects of the topic.

For another example, let’s compare two prompts:

Bad prompt: “What is artificial intelligence?”

Good prompt: “Explain the concept of artificial intelligence, its history, and current applications in industries such as healthcare, finance, and education. Provide examples of AI-powered tools and their potential benefits and limitations.”

The good prompt provides more context and specificity, increasing the chances of getting a relevant and informative response.
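One way to make the difference concrete is to assemble prompts from explicit parts. The sketch below is only an illustration: the `build_prompt` helper and its parameters are hypothetical names, not part of any library, and the wording of the assembled prompt is one choice among many.

```python
def build_prompt(task: str, aspects: list[str], output_format: str) -> str:
    """Assemble a specific prompt from a task, the aspects to cover, and a format."""
    lines = [task.strip(), "", "Cover the following aspects:"]
    lines += [f"- {aspect}" for aspect in aspects]
    lines += ["", f"Format: {output_format.strip()}"]
    return "\n".join(lines)


# The "good prompt" from above, rebuilt from its parts.
prompt = build_prompt(
    task="Explain the concept of artificial intelligence.",
    aspects=[
        "its history",
        "current applications in healthcare, finance, and education",
        "examples of AI-powered tools, with benefits and limitations",
    ],
    output_format="clear headings with bullet points where appropriate",
)
print(prompt)
```

Forcing yourself to fill in the task, the aspects, and the format separately makes it harder to end up with a vague one-line prompt.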

Understanding the difference between good and bad prompts is crucial for effective AI interactions. By crafting well-structured and clear prompts, you can unlock the full potential of large language models and get more accurate and relevant responses.

Understanding Large Language Models

One of the most critical aspects of mastering AI interactions is understanding how large language models (LLMs) work. These AI systems are trained on vast amounts of text data and can generate human-like text, answer questions, and perform various language-related tasks.

Input-Output Mechanism

Assuming you’re familiar with the basics of LLMs, let’s dive deeper into their input-output mechanism. When you provide a prompt to an LLM, it processes the input text and generates a response based on patterns learned from its training data. This process involves complex algorithms and statistical models that enable the AI to understand the context, intent, and nuances of the input prompt.

The output generated by an LLM is a result of this processing, and it can take various forms, such as text, answers, or even code. The quality and relevance of the output depend on the quality of the input prompt, the AI’s training data, and its algorithms.

Probabilistic Nature

You might be surprised to learn that LLMs operate on a probabilistic basis. This means that the output generated by an LLM is sampled from probabilities, which can result in varying responses even with the same input prompt. The AI is essentially making educated guesses about the most likely response based on its training data and algorithms.

This probabilistic nature can be both an advantage and a disadvantage. On the one hand, it allows LLMs to generate creative and diverse responses. On the other hand, it can lead to inconsistencies and inaccuracies if not properly managed.

This aspect of LLMs is crucial to understand, as it can impact the reliability and accuracy of the output. By recognizing the probabilistic nature of LLMs, you can take steps to mitigate potential issues and optimize your prompts for better results.
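The toy sketch below illustrates where the variation comes from. It assumes the common setup in which a model assigns a score to each candidate next token and a temperature setting controls how random the pick is; the scores are made up, and `sample_next_token` is a hypothetical helper rather than a real model API.

```python
import math
import random


def sample_next_token(scores: dict[str, float], temperature: float, rng: random.Random) -> str:
    """Sample one token from softmax(scores / temperature)."""
    if temperature <= 0:
        # Treat zero temperature as greedy decoding: always pick the top score.
        return max(scores, key=lambda tok: scores[tok])
    scaled = {tok: score / temperature for tok, score in scores.items()}
    top = max(scaled.values())
    # Subtract the maximum before exponentiating for numerical stability.
    weights = {tok: math.exp(value - top) for tok, value in scaled.items()}
    tokens = list(weights)
    return rng.choices(tokens, weights=[weights[t] for t in tokens], k=1)[0]


# Made-up scores for the word that follows "The sky is".
toy_scores = {"blue": 3.0, "clear": 2.0, "falling": 0.5}
rng = random.Random(0)
samples = [sample_next_token(toy_scores, temperature=1.0, rng=rng) for _ in range(10)]
greedy = [sample_next_token(toy_scores, temperature=0.0, rng=rng) for _ in range(10)]
print(samples)  # usually a mix of tokens, weighted toward "blue"
print(greedy)   # always the highest-scoring token
```

Lower temperatures make the output more repeatable, while higher ones trade consistency for variety. Whether and how a given model exposes such a setting depends on the provider.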

Context Window

There’s another important aspect of LLMs to consider: the context window. This refers to the limited amount of text that an LLM can process and consider at once. The context window determines how much background information or context the AI can use to generate its response.

Understanding the context window is vital, as it can significantly impact the quality and relevance of the output. By providing the right amount and type of context, you can help the AI generate more accurate and informative responses.

In practice, the context window is measured in tokens (word fragments) rather than characters. Its size depends on the specific model and ranges from a few thousand tokens to hundreds of thousands or more. The prompt, any supporting material, and typically the response all share this budget, so you need to craft your prompts to provide the necessary context within these limits.
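Context limits are usually counted in tokens, and each model has its own tokenizer. The sketch below shows the budgeting idea only: `count_tokens` is a rough stand-in that counts whitespace-separated words, and `fit_to_budget` is a hypothetical helper that keeps background snippets until the budget is spent.

```python
def count_tokens(text: str) -> int:
    """Rough stand-in for a tokenizer: counts whitespace-separated words."""
    return len(text.split())


def fit_to_budget(instruction: str, snippets: list[str], max_tokens: int) -> str:
    """Keep the instruction, then add snippets in order until the budget is spent."""
    used = count_tokens(instruction)
    kept = []
    for snippet in snippets:
        cost = count_tokens(snippet)
        if used + cost > max_tokens:
            break  # the next snippet would overflow the budget
        kept.append(snippet)
        used += cost
    # Background first, instruction last.
    return "\n\n".join([*kept, instruction])


notes = [
    "Paris is the capital of France.",
    "The Seine runs through Paris.",
    "France uses the euro.",
]
prompt = fit_to_budget("Summarize the notes above in one sentence.", notes, max_tokens=18)
print(prompt)
```

With a budget of 18 "tokens", the first two notes fit alongside the instruction and the third is dropped. A real application would swap in the model's own tokenizer and might rank snippets by relevance instead of taking them in order.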

No Real-Time Knowledge

Even with their impressive capabilities, most LLMs have a knowledge cutoff and don’t have access to real-time information unless specifically designed to do so. This means that they may not be aware of very recent events, updates, or changes in various domains.

Another important consideration is that LLMs are typically trained on static datasets, which can become outdated over time. This can lead to inaccuracies or incomplete information if not properly addressed.
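A common workaround is to put the missing information into the prompt yourself. The sketch below assumes you already have the current date and a few up-to-date facts on hand; `with_fresh_context` is a hypothetical helper, and the product named in the example is invented for illustration.

```python
from datetime import date


def with_fresh_context(question: str, facts: list[str], today: date) -> str:
    """Prepend the current date and caller-supplied facts to a question."""
    lines = [f"Today's date: {today.isoformat()}", "Recent facts you can rely on:"]
    lines += [f"- {fact}" for fact in facts]
    lines += ["", question]
    return "\n".join(lines)


prompt = with_fresh_context(
    question="Which release should new users install?",
    # Invented example fact; replace with information you have verified.
    facts=["Version 2.0 of the (hypothetical) Acme SDK was released last week."],
    today=date(2024, 1, 15),
)
print(prompt)
```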

Examples of How Context Affects Output

How an LLM responds depends heavily on the context you provide. Let’s consider an example to illustrate this point. Suppose you ask an LLM, “What is the capital of France?” without providing any additional context. The AI will likely respond with “Paris.” If you instead ask, “What was the capital of France in the 18th century?”, the answer is still Paris, but the AI is likely to take the historical framing into account, for example by noting that the royal court sat at Versailles for much of that period.

Examples of how context affects output are numerous, and understanding this aspect is crucial for effective AI interactions. By providing the right context, you can guide the AI to generate more accurate and relevant responses.

Remember, mastering the art of prompting requires a solid understanding of LLMs and their mechanisms. By recognizing the input-output mechanism, probabilistic nature, context window, and limitations of LLMs, you can optimize your prompts for better results and unlock the full potential of AI interactions.

The Importance of Prompting Fundamentals

Keep in mind that mastering prompting fundamentals is crucial for several reasons. These basics form the foundation of all effective AI interactions, and neglecting them can lead to subpar results, wasted time, and frustration.

Efficiency

Assuming you’re working with a large language model, a well-crafted prompt can save you time by getting accurate responses faster. This is because a good prompt guides the AI to produce the most valuable and relevant output, reducing the need for multiple iterations and clarifications. A single, well-designed prompt can replace a series of poorly constructed ones, saving you hours of effort.

Moreover, efficient prompting enables you to focus on higher-level tasks, such as analyzing results, identifying patterns, and making informed decisions. By streamlining your AI interactions, you can accelerate your workflow and achieve more in less time.

Accuracy

A poorly constructed prompt can lead to misinterpretation by the AI, resulting in inaccurate or irrelevant responses. This is because the AI is only as good as the input it receives, and a vague or ambiguous prompt can confuse the model. A single misunderstanding can propagate through the entire interaction, leading to a cascade of errors.

Conversely, a well-crafted prompt reduces the chances of misinterpretation by providing clear guidance and context. This makes it more likely that the AI produces accurate and relevant responses, which are essential for making informed decisions or taking action.

Fundamentals of prompting accuracy involve understanding how to provide clear instructions, define specific goals, and include relevant context. By mastering these basics, you can significantly improve the accuracy of your AI interactions.

Creativity

Now, let’s explore the creative aspects of prompting fundamentals. Understanding how to craft effective prompts unlocks the full potential of AI, enabling you to explore new ideas, generate innovative solutions, and automate complex tasks. A well-designed prompt can inspire the AI to produce novel and valuable responses that might not have been possible otherwise.

Moreover, creative prompting allows you to tap into the generative capabilities of AI systems, such as text generation and, with suitable models, image creation or music composition. By providing the right guidance and constraints, you can coax the AI into producing outputs that would take a person far longer to draft from scratch.

The key to creative prompting lies in understanding how to balance structure and freedom, providing enough guidance to focus the AI’s efforts while allowing it to explore new possibilities.

Problem-Solving

Effective prompting is essential for breaking down complex problems into manageable parts. By dividing a problem into smaller, well-defined tasks, you can create a series of prompts that guide the AI toward a comprehensive solution. This approach lets you tackle problems that would be impractical to work through manually, leveraging the AI’s scalability and processing power.

Moreover, problem-solving through prompting involves understanding how to identify key variables, define constraints, and prioritize tasks. By mastering these skills, you can develop a systematic approach to tackling complex challenges, making you a more effective problem-solver.

Plus, the ability to break down problems into smaller parts enables you to iterate and refine your approach, adapting to new information and feedback.
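The decomposition described above can be sketched as a small pipeline in which each step’s output becomes the next step’s input. Everything here is illustrative: `run_steps` is a hypothetical helper, the cost-cutting scenario is invented, and `echo_model` is a stub standing in for a real LLM call so the example runs on its own.

```python
from typing import Callable


def run_steps(steps: list[str], model: Callable[[str], str], source: str) -> str:
    """Feed each step's output into the next step's prompt."""
    current = source
    for step in steps:
        prompt = f"{step}\n\nInput:\n{current}"
        current = model(prompt)
    return current


def echo_model(prompt: str) -> str:
    """Stub standing in for a real LLM call: reports which step it received."""
    step = prompt.splitlines()[0]
    return f"[result of: {step}]"


steps = [
    "List the key variables in the problem.",
    "Define the constraints on those variables.",
    "Propose a prioritized plan that respects the constraints.",
]
final = run_steps(steps, echo_model, "We need to cut cloud costs by 20%.")
print(final)
```

Because each step is a separate prompt, you can inspect and refine any intermediate result before passing it on.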

Consistency

Prompting fundamentals also play a crucial role in ensuring consistency across different interactions. By developing a standardized approach to crafting prompts, you can make your AI interactions produce more consistent results across tasks and contexts, even though the probabilistic nature of LLMs means some variation will remain. This consistency is essential for building trust in the AI’s output, enabling you to rely on its responses for critical decisions.

It’s worth noting that consistency is not just about the AI’s output but also about the input. By using a standardized prompt structure, you can ensure that your inputs are clear, concise, and well-defined, reducing the likelihood of errors or misinterpretation.

In essence, mastering prompting fundamentals is essential for efficient, accurate, creative, and consistent AI interactions. By understanding the importance of these basics, you can unlock the full potential of AI and achieve remarkable results.

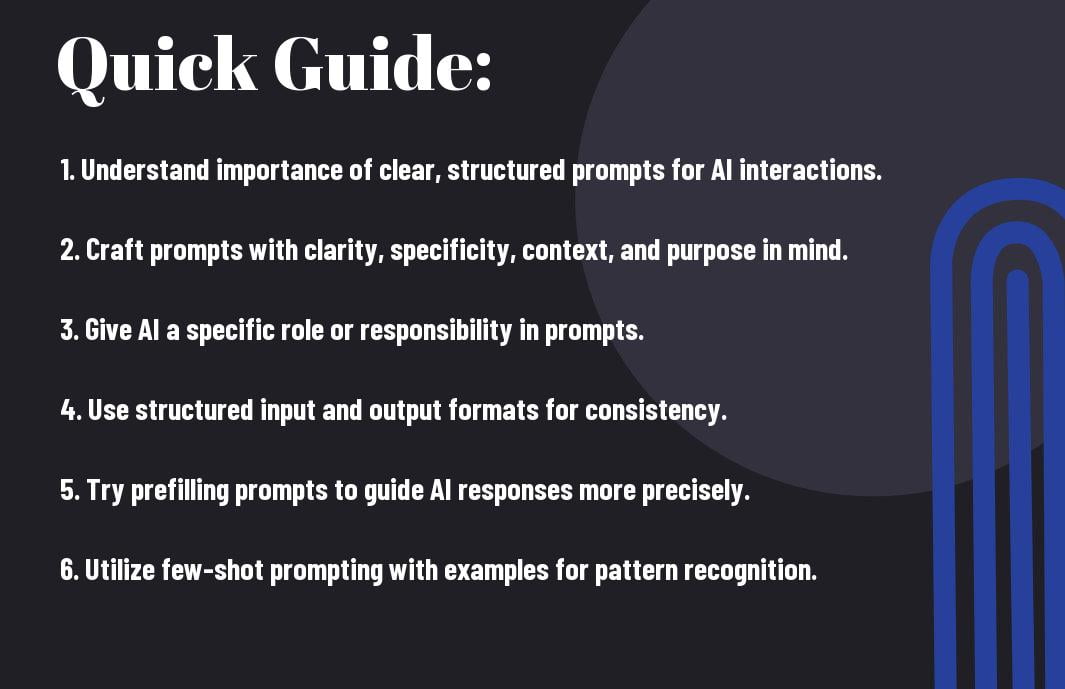

Key Prompting Techniques

Unlike traditional programming, where you write code to achieve a specific outcome, prompting involves crafting input that guides the AI to produce the desired output. In this chapter, we’ll explore essential techniques to help you master the art of prompting.

Giving the Model a Role or Responsibility

Any successful collaboration starts with clear expectations and roles. When interacting with AI, you can significantly influence the output by assigning a specific role or responsibility to the model. This technique helps the AI understand the context and tone of the response, leading to more accurate and relevant results.

By giving the model a role, you’re essentially telling it how to think and respond. For instance, you might ask the AI to assume the role of a technical writer, a financial expert, or a creative storyteller. The key is to provide enough context and guidance to help the AI understand the expectations and boundaries of that role.
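In chat-style interfaces, a role is often set in a separate system message placed before the user’s request. The sketch below assumes a generic list of `role`/`content` dictionaries, a convention many chat APIs resemble, though exact field names vary by provider; `with_role` is a hypothetical helper.

```python
def with_role(role_description: str, user_request: str) -> list[dict[str, str]]:
    """Build a two-message conversation: a system message that sets the role, then the request."""
    return [
        {"role": "system", "content": f"You are {role_description}."},
        {"role": "user", "content": user_request},
    ]


messages = with_role(
    "a technical writer who explains concepts to non-experts",
    "Describe what an API is in three sentences.",
)
for message in messages:
    print(f"{message['role']}: {message['content']}")
```

If your tool has no separate system message, the same role description can simply open the prompt itself.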

In the next section, we’ll explore another fundamental technique: structured input and output.

| Technique | Description |
|---|---|
| Giving the Model a Role or Responsibility | Assign a specific role or responsibility to the AI to influence its output and tone. |

Structured Input and Output

You can create more efficient and effective interactions with AI by using structured input and output. This technique involves specifying the format and organization of the input and output, making it easier for both you and the AI to understand and work with the data.

By using structured input and output, you can reduce errors, improve consistency, and increase the accuracy of the AI’s responses. This technique is particularly useful when building applications or automations that rely on AI-generated content.

For example, you might ask the AI to provide information in a specific format, such as JSON or XML, or to follow a particular structure for its response. By doing so, you can ensure that the output is easy to parse and integrate into your workflow.

Additionally, structured input and output can help you to:

- Reduce errors: By specifying the format and organization of the input and output, you can minimize errors and inconsistencies.

- Improve consistency: Structured input and output ensure that the AI’s responses follow a consistent format, making it easier to work with the data.

- Increase accuracy: By providing clear guidance on the format and organization of the output, you can increase the accuracy of the AI’s responses.
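As a rough sketch of the JSON case, the code below asks for a fixed set of keys and then checks that a reply actually contains them. The key names, the `make_json_prompt` and `parse_reply` helpers, and the sample reply are all made up for illustration; a real model reply may need extra cleanup before it parses.

```python
import json

# Hypothetical schema for this example.
REQUIRED_KEYS = {"name", "varieties", "culinary_uses"}


def make_json_prompt(subject: str) -> str:
    """Ask for a JSON object with a fixed set of keys and nothing else."""
    keys = ", ".join(sorted(REQUIRED_KEYS))
    return (
        f"Describe {subject}. Respond with a single JSON object "
        f"containing exactly these keys: {keys}. Do not add any other text."
    )


def parse_reply(reply: str) -> dict:
    """Parse the reply as JSON and check that every required key is present."""
    data = json.loads(reply)
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    missing = REQUIRED_KEYS - set(data)
    if missing:
        raise ValueError(f"missing keys: {sorted(missing)}")
    return data


# Hand-written stand-in for a model reply.
sample_reply = '{"name": "apple", "varieties": ["Fuji", "Gala"], "culinary_uses": ["pie", "cider"]}'
record = parse_reply(sample_reply)
print(make_json_prompt("apples"))
print(record["varieties"])
```

Validating the reply before using it means a malformed response fails loudly instead of slipping into your workflow.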

Role-based prompting and structured input and output are just the beginning. In the next section, we’ll explore another powerful technique: prefilling.

Prefilling

You can guide the AI’s response more precisely by providing the beginning of the output you’re looking for. This technique, known as prefilling, helps the AI understand the tone, style, and format of the desired response.

By prefilling the response, you’re essentially giving the AI a head start on generating the output. This can be particularly useful when you need the AI to produce a specific type of content, such as a report, an article, or a story.

Prefilling can also help you to:

- Save time: By providing the beginning of the response, you can save time and effort in guiding the AI to produce the desired output.

- Improve accuracy: Prefilling helps the AI understand the context and tone of the response, leading to more accurate and relevant results.
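In a chat-style interface, prefilling is often done by ending the conversation with a partial assistant message that the model then continues. Whether this is supported, and how, depends on the provider, so treat the message layout below as an assumption; `with_prefill` and `join_completion` are hypothetical helpers, and the continuation is hand-written rather than real model output.

```python
import json


def with_prefill(user_request: str, prefill: str) -> list[dict[str, str]]:
    """Append a partial assistant message that the model is expected to continue."""
    return [
        {"role": "user", "content": user_request},
        {"role": "assistant", "content": prefill},
    ]


def join_completion(prefill: str, continuation: str) -> str:
    """A model typically returns only the continuation, so stitch the prefill back on."""
    return prefill + continuation


# Starting the reply with "[" nudges the model toward a bare JSON array.
messages = with_prefill("List three popular apple varieties as a JSON array.", "[")

# Hand-written stand-in for what a model might return after the prefill.
full = join_completion("[", '"Fuji", "Gala", "Honeycrisp"]')
parsed = json.loads(full)
print(parsed)
```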

In the next section, we’ll explore another advanced technique: few-shot prompting.

Few-Shot Prompting

Few-shot prompting is a more advanced technique that involves providing the AI with a few examples of the type of response you’re looking for, which can help it understand the pattern and produce similar outputs.

By providing 2-3 examples of input-output pairs, you can guide the AI to generate responses that follow a specific pattern or structure. This technique is particularly useful when you need the AI to produce high-quality content that meets specific criteria or follows a particular format.

Few-shot prompting can also help you to:

- Improve consistency: By providing examples of the desired output, you can ensure that the AI’s responses follow a consistent pattern and structure.

- Increase accuracy: Few-shot prompting helps the AI understand the context and tone of the response, leading to more accurate and relevant results.
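A few-shot prompt can be assembled mechanically from input-output pairs. The sketch below uses a simple `Input:` / `Output:` layout, which is one convention among many; `few_shot_prompt` is a hypothetical helper and the review sentences are invented for the example.

```python
def few_shot_prompt(instruction: str, examples: list[tuple[str, str]], new_input: str) -> str:
    """Lay out the instruction, the worked examples, and the new input to complete."""
    parts = [instruction, ""]
    for example_input, example_output in examples:
        parts += [f"Input: {example_input}", f"Output: {example_output}", ""]
    # End on an open "Output:" so the model fills in the missing label.
    parts += [f"Input: {new_input}", "Output:"]
    return "\n".join(parts)


examples = [
    ("The battery lasts all day.", "positive"),
    ("The screen cracked within a week.", "negative"),
    ("It arrived on Tuesday.", "neutral"),
]
prompt = few_shot_prompt(
    "Classify the sentiment of each review as positive, negative, or neutral.",
    examples,
    "Setup was quick and painless.",
)
print(prompt)
```

Keeping every example in the same layout matters as much as the examples themselves, since the model tends to imitate the format it sees.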

In the final section of this chapter, we’ll explore another powerful technique: chain of thought.

Chain of Thought

One of the most effective ways to handle complex tasks is to have the AI reason step by step instead of jumping straight to an answer. This technique, known as chain of thought, involves asking the model to work through a series of logical steps, which tends to produce a more coherent and well-structured response.

By using chain of thought, you can:

- Improve clarity: Breaking down complex tasks into smaller steps helps to clarify the thought process and ensures that the AI understands the context and requirements.

- Increase accuracy: Chain of thought helps the AI to produce more accurate and relevant responses by guiding it through a series of logical steps.
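A minimal sketch of this technique asks for numbered steps followed by a clearly marked final line, then pulls that line out of the reply. The `Answer:` marker, both helper names, and the sample reply are assumptions made for this example, not a standard.

```python
from typing import Optional


def chain_of_thought_prompt(question: str) -> str:
    """Ask for numbered reasoning steps followed by a marked final answer."""
    return (
        f"{question}\n\n"
        "Work through the problem step by step, numbering each step. "
        "Then give the result on a final line that starts with 'Answer:'."
    )


def extract_answer(reply: str) -> Optional[str]:
    """Return the text after the last 'Answer:' line, or None if there is none."""
    for line in reversed(reply.splitlines()):
        if line.strip().startswith("Answer:"):
            return line.split("Answer:", 1)[1].strip()
    return None


# Hand-written stand-in for a model reply.
sample_reply = "1. Each box holds 12 apples.\n2. 3 boxes hold 3 * 12 = 36 apples.\nAnswer: 36"
print(chain_of_thought_prompt("How many apples are in 3 boxes of 12?"))
print(extract_answer(sample_reply))
```

Separating the reasoning from the marked answer lets you read the steps when checking for errors while still handing a clean result to the rest of your workflow.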

Now that we’ve covered the key prompting techniques, you’re ready to start experimenting with AI interactions. Remember to practice and refine your skills, and don’t be afraid to try new approaches and techniques.

Factors Affecting Prompting Outcomes

Now, let’s explore the key factors that can significantly impact the outcomes of your AI interactions.

When crafting prompts, it’s vital to consider the following elements, as they can either enhance or hinder the effectiveness of your interactions:

- Model Size and Complexity

- Training Data Quality

- Context and Background Information

- User Intent and Goal

Model Size and Complexity

You may have noticed that larger language models tend to produce more comprehensive and accurate responses. This is because they have been trained on vast amounts of text data, allowing them to recognize patterns and relationships more effectively.

However, model size and complexity can also lead to increased computational resources, slower response times, and a higher risk of overfitting or memorization. When working with larger models, it’s crucial to balance their capabilities with the specific requirements of your task or application.

Training Data Quality

There’s no denying that the quality of the training data has a direct impact on the performance of a large language model. High-quality training data can result in more accurate and informative responses, while low-quality data can lead to biased or misleading outputs.

Understanding the characteristics of the training data, such as its scope, diversity, and potential biases, is vital for crafting effective prompts and achieving desired outcomes.

In addition, it’s vital to recognize that training data quality can influence the model’s ability to generalize to new, unseen scenarios. By acknowledging these limitations, you can design prompts that take into account the model’s strengths and weaknesses.

Context and Background Information

Assuming you’re working with a large language model, providing context and background information can significantly enhance the accuracy and relevance of its responses.

This context can include details about the topic, the intended audience, or the specific goals and objectives of the interaction. By incorporating this information into your prompts, you can guide the model to produce more targeted and informative outputs.

Complexity arises when dealing with ambiguous or open-ended contexts, where the model may struggle to disambiguate or prioritize relevant information. In such cases, it’s crucial to provide clear guidance and constraints to help the model navigate these complexities.

User Intent and Goal

Helping a large language model understand your intent and goal is critical for achieving successful outcomes. This involves not only stating your explicit request but also making your implicit needs and preferences visible to the model.

You can shape the model’s understanding of your intent by building cues such as tone, word choice, and context into your prompts. By doing so, you encourage the model to produce responses that are more aligned with your underlying objectives.

Consider these factors whenever you craft prompts; ignoring them tends to lead to suboptimal results.

Tips for Effective Prompting

Crafting effective prompts is crucial to getting the most out of your AI interactions. The following tips will help you master the art of prompting.

Be Clear and Concise

Crisp and focused language is important in prompting. Avoid using vague or open-ended prompts that may confuse the AI. Instead, strive for clarity and specificity in your requests. This will help the AI provide more accurate and relevant responses.

Remember, a clear prompt is one that is easy to understand and free from ambiguity. Take the time to craft your prompts carefully, and you’ll be rewarded with better results.

Use Specific Language and Terminology

Prompting with specific language and terminology helps the AI understand the context and nuances of your request. This is particularly important when working with domain-specific knowledge or technical topics.

Using specific language and terminology also helps to reduce the chances of misinterpretation and ensures that the AI provides responses that are relevant to your needs.

Effective language models can recognize and respond to specific terminology, so don’t be afraid to use technical jargon or domain-specific language in your prompts. This will help you get more accurate and informative responses.

Provide Relevant Context and Background

Providing relevant context and background information helps the AI understand the broader picture and provide more informed responses. This is especially important when working with complex topics or nuanced requests.

Take the time to provide any relevant context, definitions, or background information that may be necessary for the AI to understand your request. This will help ensure that the AI provides responses that are accurate, relevant, and informative.

For instance, if you’re asking the AI to analyze a complex data set, providing context about the data, its sources, and any relevant assumptions can help the AI provide more accurate and meaningful insights.

Define the Task or Goal Clearly

Clearly defining the task or goal helps the AI understand what you’re trying to achieve and provides a clear direction for its response.

A well-defined task or goal helps to focus the AI’s attention and ensures that its response is relevant and meaningful. Take the time to clearly articulate what you’re trying to achieve, and you’ll be rewarded with better results.

Background information, such as the purpose of the task or the desired outcome, can also be helpful in providing context and guiding the AI’s response.

Use Structured Input and Output

Using structured input and output helps to organize and format the data in a way that’s easy for the AI to understand and process.

Clearly specifying the structure of the input and output helps the AI to provide responses that are consistent, well-organized, and easy to parse.

Output formats, such as JSON or XML, can be particularly useful when working with data-intensive tasks or building applications that require structured data.

Output structures can also help to ensure consistency across multiple interactions, making it easier to integrate the AI’s responses into your workflow or application.

Knowing these tips and techniques will help you craft effective prompts that get the most out of your AI interactions. By being clear, concise, and specific, you can unlock the full potential of AI and achieve better results.

Pros and Cons of Different Prompting Techniques

After understanding the various prompting techniques, it’s crucial to weigh their advantages and disadvantages to choose the most suitable approach for your specific use case.

| Prompting Technique | Pros and Cons |
|---|---|
| Giving the Model a Role or Responsibility | Pros: Encourages creative and context-specific responses, helps AI understand the desired tone and expertise. Cons: May lead to overly creative or inaccurate responses if not properly constrained. |
| Structured Input and Output | Pros: Simplifies parsing and integration of AI output, enables automation and scalability. Cons: May limit the AI’s ability to provide novel or creative responses. |
| Prefilling | Pros: Guides the AI’s response to ensure consistency and relevance, saves time by providing a starting point. Cons: May lead to overly structured or formulaic responses if not balanced with creative freedom. |
| Few-Shot Prompting | Pros: Lets the AI pick up a pattern from a handful of examples and apply it to new inputs, without fine-tuning on extensive training data. Cons: Output quality depends heavily on the examples chosen, and the AI may not always infer the underlying pattern. |
| Chain of Thought | Pros: Encourages the AI to think step-by-step and provide transparent reasoning, helps identify errors or biases in the response. Cons: May lead to overly verbose or convoluted responses if not properly guided. |

Giving the Model a Role or Responsibility

If you want to encourage creative and context-specific responses from your AI model, giving it a role or responsibility can be an effective technique. By assigning a specific expertise or perspective, you can guide the AI’s output to be more relevant and informative.

For instance, if you’re asking the AI to generate a product description, you could give it the role of a marketing expert with a focus on highlighting the product’s key features and benefits. This approach can lead to more engaging and persuasive responses that resonate with your target audience.

Structured Input and Output

Model your input and output structures carefully to ensure seamless integration with your application or workflow. By specifying the format and content of the AI’s response, you can simplify parsing and processing, making it easier to automate tasks and scale your operations.

For instance, if you’re building a chatbot that provides customer support, you could use structured input and output to ensure that the AI’s responses are concise, informative, and easy to understand. This approach can lead to faster resolution times and higher customer satisfaction.

Prefilling

With prefilling, you provide the AI with a starting point for its response, which can help guide its output and ensure consistency. By giving the AI a head start, you can save time and effort while still achieving your desired outcome.

Techniques like prefilling can be particularly useful when working with complex or open-ended tasks, where the AI may struggle to generate a response from scratch. By providing a foundation for the response, you can help the AI build upon it and produce a more comprehensive and accurate output.

Few-Shot Prompting

Few-shot prompting is a powerful technique that lets the AI pick up a pattern from examples included in the prompt and apply it to new inputs. By providing a few high-quality examples, you show the AI the patterns and relationships you care about, allowing it to produce similar outputs for new inputs.

Another advantage of few-shot prompting is that it can reduce the need to fine-tune a model on extensive training data, making it a more efficient and cost-effective approach. However, it’s crucial to ensure that the examples you provide are relevant, accurate, and diverse, as this will directly impact the quality of the AI’s responses.

Chain of Thought

For more complex or abstract tasks, using a chain of thought approach can help the AI think step-by-step and provide transparent reasoning. By breaking down the task into smaller, more manageable components, you can guide the AI’s response and ensure that it’s logical and coherent.

Output from a chain of thought approach can be particularly useful when you need to understand the AI’s decision-making process or identify potential errors or biases. By seeing the AI’s thought process, you can gain insights into its strengths and weaknesses, allowing you to refine your prompts and improve the overall quality of the responses.

Conclusion

Ultimately, mastering the art of prompting is crucial for unlocking the full potential of large language models. By understanding the characteristics of a good prompt, the inner workings of LLMs, and key prompting techniques such as giving the model a role or responsibility, structured input and output, prefilling, few-shot prompting, and chain of thought, you’ll be able to harness the power of AI to achieve your goals. Remember, effective prompting is not just about asking the right questions, but also about providing the right guidance and context to get the most accurate and relevant responses.

As you continue to explore the world of AI interactions, keep in mind that prompting fundamentals form the foundation of all effective interactions. By applying the techniques and strategies outlined in this comprehensive guide, you’ll be able to streamline your workflow, unlock creative uses of AI, and solve complex problems with ease. So, take the first step in mastering the art of prompting, and discover the incredible possibilities that await you in the world of AI.

FAQ

Q: What is the purpose of “Prompting Fundamentals: A Comprehensive Guide to Mastering AI Interactions”?

A: This comprehensive guide aims to equip readers with the knowledge and techniques to effectively communicate with large language models (LLMs), enabling them to harness the power of AI and get the most out of their interactions.

Q: What makes a good prompt?

A: A good prompt is clear, specific, and well-structured, providing enough context and guidance for the AI to produce a valuable and relevant output. It should have a clear goal or desired outcome and include relevant background information when necessary.

Q: How do Large Language Models (LLMs) work?

A: LLMs take text input (a prompt) and generate text output based on patterns learned from their training data. They are probabilistic, meaning results can vary even with the same input. They also have a limited context window and typically don’t have access to real-time information unless specifically designed to do so.

Q: Why is mastering prompting fundamentals important?

A: Mastering prompting fundamentals is crucial for efficiency, accuracy, creativity, problem-solving, and consistency in AI interactions. It enables users to get accurate responses faster, reduce misinterpretation, unlock creative uses of AI, break down complex problems, and ensure consistent results across different interactions.

Q: What are some key prompting techniques?

A: Some crucial prompting techniques include giving the model a role or responsibility, using structured input and output, prefilling, few-shot prompting, and chain of thought. These techniques can significantly influence the AI’s output and help users achieve their desired goals.